Is Google Translate good enough?

Machine translation continues to evolve. With artificial intelligence in the mix, machine translations seem almost human. Google Translate is one of the top players in this market, supplying everything from basic text translation to browser-embedded (Chrome) translation to a robust translation API. But even with all of these options, is Google Translate good enough?

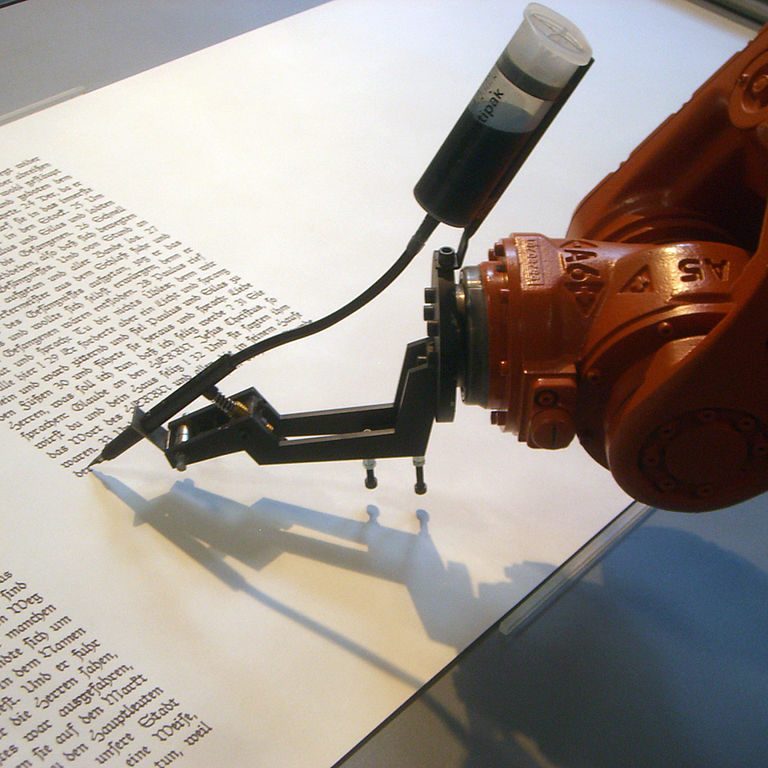

Make sure the machine is representing you appropriately! (image source: Flickr/Mirko Tobias Schäfer)

Successful translation, machine-generated or otherwise, still depends on three core content facets: audience, subject matter, and quality.

Audience

Audience expectations matter. Do your audiences expect content tailored to them, or will they accept potential translation flaws?

Your audience may be more forgiving of translation errors or awkward phrasing in general content. They may be less forgiving (or insulted) if instructional or targeted content contains obvious flaws.

With Google Translate, using the API to translate and publish web content directly might be good enough. But if your audience expects tailored content, your translation process needs additional steps to ensure the content speaks to them.
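For illustration, here is a minimal Python sketch of that direct "translate and publish" path. It assumes the REST endpoint of the Cloud Translation API (Basic edition, v2) and an API key. The function names and the placeholder key are hypothetical. To stay runnable offline, the sketch only builds the request and parses a response; it does not contact Google.

```python
import json
from typing import List, Optional, Tuple
from urllib.parse import urlencode

# Assumed endpoint: the Cloud Translation API (Basic edition, v2) REST interface.
ENDPOINT = "https://translation.googleapis.com/language/translate/v2"


def build_translate_request(
    text: str, target: str, api_key: str, source: Optional[str] = None
) -> Tuple[str, bytes]:
    """Return (url, JSON body) for a v2 translate call. Nothing is sent here."""
    payload = {"q": [text], "target": target, "format": "text"}
    if source is not None:
        # Omit the source language to let the service auto-detect it.
        payload["source"] = source
    url = f"{ENDPOINT}?{urlencode({'key': api_key})}"
    return url, json.dumps(payload).encode("utf-8")


def parse_translations(body: str) -> List[str]:
    """Extract the translatedText values from a v2 JSON response body."""
    data = json.loads(body)
    return [item["translatedText"] for item in data["data"]["translations"]]
```

To actually send the request, you could POST the body with `urllib.request.Request(url, data=body, headers={"Content-Type": "application/json"})`. Publishing whatever `parse_translations` returns, with no review step in between, is the "might be good enough" scenario described above. Tailored content needs a human pass before publication.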

Know your audiences’ expectations before deciding on your translation approach.

Subject matter

The subject matter of your content also affects machine translation accuracy. Will machine translation tools understand your terminology?

Machine translation tools are only as smart as they are trained to be. If you use special terminology, or if your content is highly technical and must be absolutely accurate (as in the life sciences), you must train the tool to understand this content.

Use a corpus of your content to train the tool to translate your content appropriately into other languages. This process is neither quick nor easy, but it is essential for accurate machine translation.

Content quality

Content quality is always important, but it is critical in the case of machine translation. Are you building quality control into source content development? Do you strictly enforce style, terminology use, and content structure?

Machine translation is most successful with content that is consistently written, using specific terms and phrases in specific contexts. Frequent use of synonyms in varying contexts can confuse machine translation tools. Likewise, inconsistent content structure can result in different translations for similar strings of text.
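One way to enforce consistent terminology before content goes to machine translation is an automated check for discouraged synonyms. The following is a minimal Python sketch. The `TERMINOLOGY` table and the `find_discouraged_terms` function are hypothetical; a real style guide or terminology tool would supply its own term list. The same approach can flag known slang or colloquialisms.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical style-guide entries: preferred term -> discouraged synonyms.
TERMINOLOGY: Dict[str, List[str]] = {
    "select": ["choose", "pick"],
    "delete": ["remove", "erase"],
}


def find_discouraged_terms(
    text: str, terminology: Dict[str, List[str]]
) -> List[Tuple[str, str]]:
    """Return (word found, preferred term) for each discouraged synonym in text."""
    hits = []
    for preferred, synonyms in terminology.items():
        for synonym in synonyms:
            # Whole-word, case-insensitive match. Inflected forms such as
            # "picked" are not caught by this simple pattern.
            pattern = rf"\b{re.escape(synonym)}\b"
            for match in re.finditer(pattern, text, flags=re.IGNORECASE):
                hits.append((match.group(0), preferred))
    return hits
```

For example, `find_discouraged_terms("Choose a file, then remove the old copy.", TERMINOLOGY)` returns `[("Choose", "select"), ("remove", "delete")]`. Writers can fix those before translation, so the tool sees one term for one concept.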

Slang, colloquialisms, and jargon are also problematic. The machine translation tool must be trained to understand these constructs and equate them to culturally appropriate localized phrases. If not, the translations will be literal, which could be embarrassing or downright offensive.

If stylistic inconsistencies, slang, colloquialisms, and the like cannot be avoided, consider using human translators, and employ transcreation where you need culturally appropriate renderings of more colorful phrasing.

So, is Google Translate good enough?

Yes and no. It really depends on how you intend to use it, and whether potential inaccuracies are allowable.

If your audience does not need or expect a 100% accurate translation, then Google Translate may be good enough. If accuracy is critical, you may need to look at other translation options, or at least build a thorough review process and custom corpus maintenance into your use of neural machine translation (NMT).

Richard Hamilton

I agree with your assessment that audience, subject matter, and content quality are critical in determining the odds of getting a good machine translation. However, I would add language to that mix. My experience with Google Translate is that it works much better with some languages than others. I use Google Translate frequently for Chinese (I work with an organization that uses Chinese for a lot of internal communication), and I find that the results (Chinese to English) are often complete nonsense. I’ve seen this happen with content that is not particularly technical or difficult. And even when the results can be mostly understood, they are often ambiguous.

I wonder (with no firm proof:-) whether the problem has to do with Chinese being a high-context language (a lot of meaning is left to be understood from context) and English being low-context (things are spelled out more explicitly). An algorithm going from Chinese to English needs to infer more information, and that can be difficult and error prone.

In any case, I’m only comfortable with automated translation when it is requested by the reader and used with the reader understanding the potential pitfalls. I would never give a reader machine translated content unless that translation has been reviewed and, if necessary, edited by a native speaker.

Bill Swallow

Well, not to get too into the weeds, but there are several levels of “Google Translate”, ranging from deep neural processing (NMT) to Ye Olde brute force statistical phrase matching. And these vary by language and translation service (web form, browser plugin, API, etc.).

But… for Chinese, all services should now be using NMT. My guess is that you’re running into colloquialisms and such. (Yes, it’s a highly contextual language.) Also, are you selecting the correct flavor of Chinese? My guess is yes, particularly if using auto-detect, but always good to check.

Again, it’s not a perfected technology, but it’s getting there. And I can’t “like” this comment enough: “I would never give a reader machine translated content unless that translation has been reviewed and, if necessary, edited by a native speaker.”

Marc

I recently tested a new machine translation service called DeepL. At least for English to German translations (that’s what I tested) the results were stunning and much better than those from Google Translate. It still wasn’t 100% perfect, but really remarkable.

Bill Swallow

DeepL made a big splash when they launched, as they were a very small company competing with the likes of Google and Microsoft in the neural machine translation market. There isn’t a lot of information about them (they hold their cards close), but they do support seven languages to date.

Mike Unwalla

If the source text is clear, I think that MT can be sufficiently good for many uses.

In a small project between 2009 and 2011, translators evaluated the results of translations from Google Translate. The results for fluency and accuracy are on http://www.techscribe.co.uk/international-english/mt-evaluation.htm.

The machine translation from English to Welsh was not good. An expert at the Language Technologies Unit, Bangor University, said that Google Translate gives better translations from Welsh to English than it does from English to Welsh. He thinks that some of the reasons for the difference are as follows:

* Google has a very good model of English. Probably, the model for Welsh is simple in comparison to the model for English. Google Translate uses a statistical method to create a model, and most of the bilingual text comes from the Welsh Assembly’s records of proceedings.

* Welsh has a more complex word structure (morphology) than English. Therefore, translating from Welsh to English is easier than translating from English to Welsh.

Bill Swallow

Thanks for the link! This appears to be before the introduction of neural machine translation, but the numbers are interesting.

Regarding Welsh, I’ve never worked with the language myself. But I’d wager you’re right regarding the difference in complexity in translating English into/from Welsh.

Rosemarie

I’ve tried to use Google Translate to go from English to Polish with horrifying results. More often than acceptable, a *negative* was omitted from a sentence. That’s kind of important! It also frequently mangles personal pronouns and gender. Luckily, I only use it for inter-office communications, where the recipient understands that I am still something of a student in the language.

Bill Swallow

Hi Rosemarie,

I think the web form still uses phrase-based MT for Polish, which is less than optimal. The (paid) API does provide NMT for Polish <> English.

Tim Slager

I think the key question is, How soon will it be good enough? From there we need to know how that will affect our processes.

Bill Swallow

A song from The Smiths comes to mind (How Soon Is Now)…

I’d say in some cases, soon *is* now. It depends on what you need, which raises the question, “What is the definition of ‘good enough’?”

The API provides MANY more NMT pairings than the conventional web service, but the results are still not 100% accurate. But are the results “good enough”? They might be if you don’t need 100% accuracy, or if you are OK with doing an editorial pass on translated content (which is always a good idea regardless of how content is translated).

Joseph

Hello Bill,

Good post.

Google Translate is good, but sometimes it produces words that do not actually work in real life, in terms of how people speak. I have a Spanish friend, and I often talk with him using Google Translate. Sometimes it works properly, but sometimes the AI is simply unable to translate words that I would find useful. The quality varies from time to time; I have experienced the same in my own work. It’s a good effort, but it still needs more development.

telef

Sometimes Google also produces text that is not in your source text. Sometimes it comes up with weird text.

Maitri Meyer

I’m looking online and in your article and comments for the acronym NMT. What does it stand for? What does it mean? Sorry you lost me on that term.

Bill Swallow

Sorry Maitri, I thought I’d defined it! I’ll update the post.

Maitri Meyer

Never mind, I finally found it! Neural Machine Translation! https://en.wikipedia.org/wiki/Neural_machine_translation